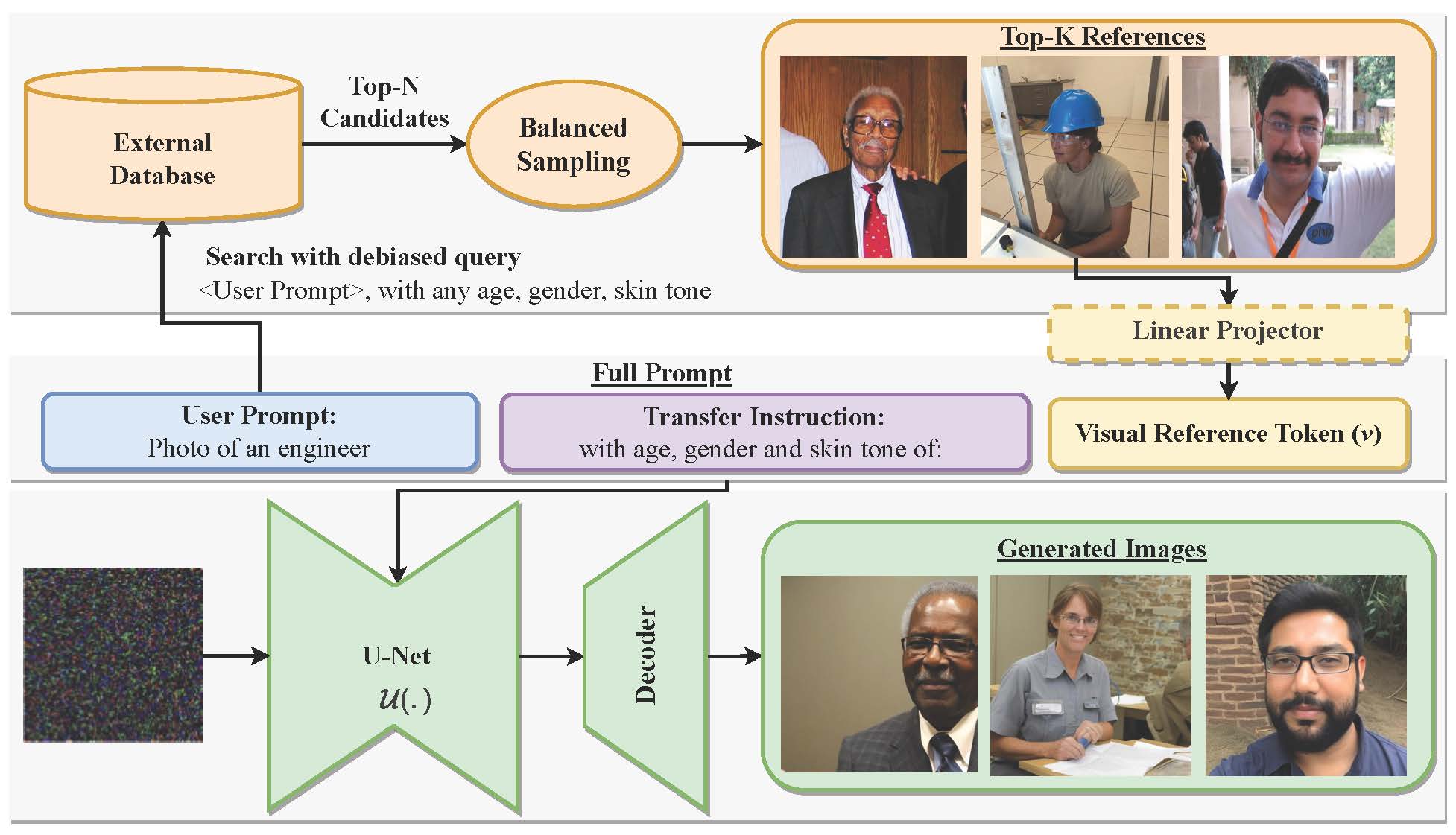

@inproceedings{shrestha2024fairrag,

title={FairRAG: Fair Human Generation via Fair Retrieval Augmentation},

author={Shrestha, Robik and Zou, Yang and Chen, Qiuyu and Li, Zhiheng and Xie, Yusheng and Deng, Siqi},

booktitle={CVPR},

year={2024}

}

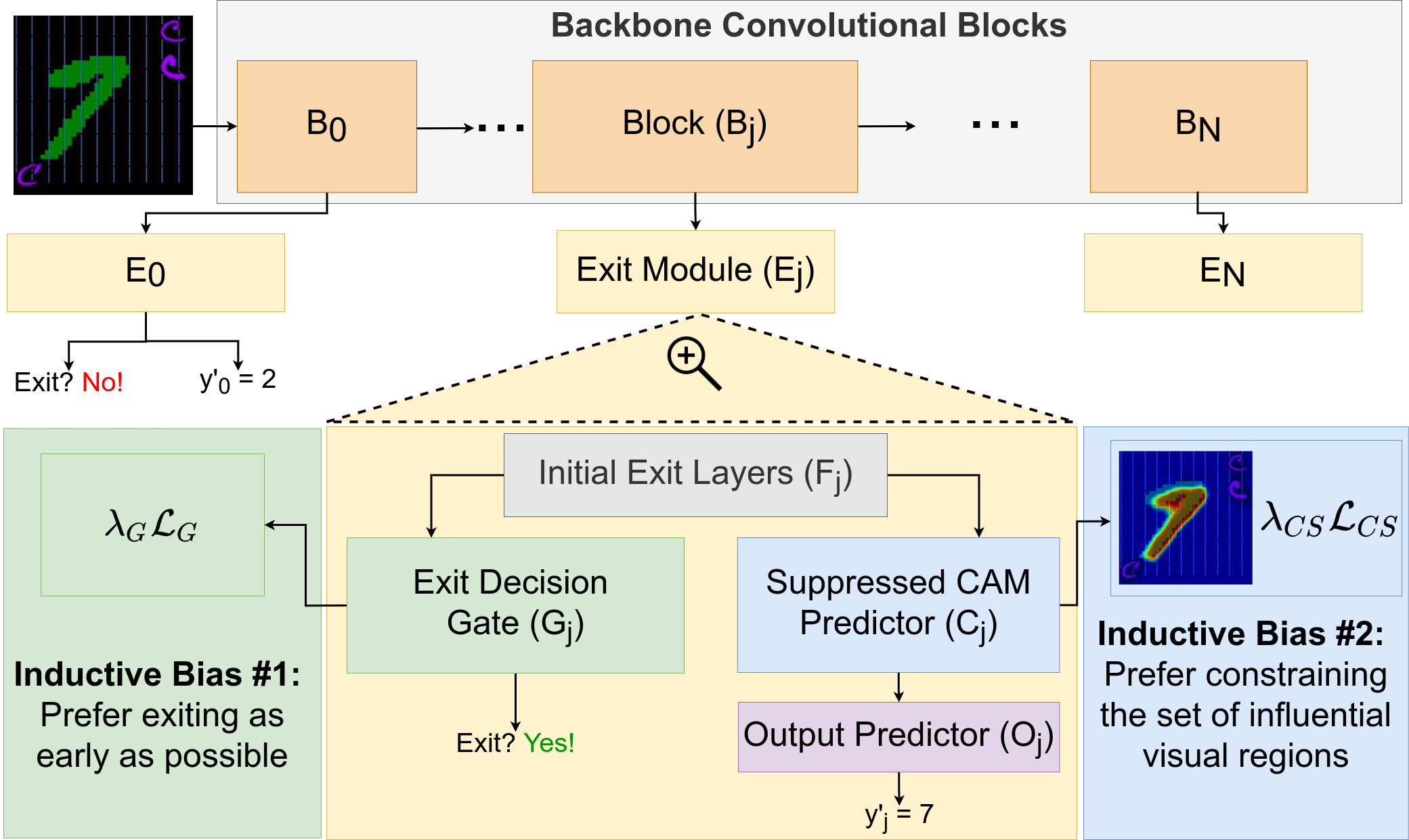

@inproceedings{shrestha2022occamnets,

title={OccamNets: Mitigating Dataset Bias by Favoring Simpler Hypotheses},

author={Shrestha, Robik and Kafle, Kushal and Kanan, Christopher},

booktitle={European Conference on Computer Vision (ECCV)},

year={2022}

}

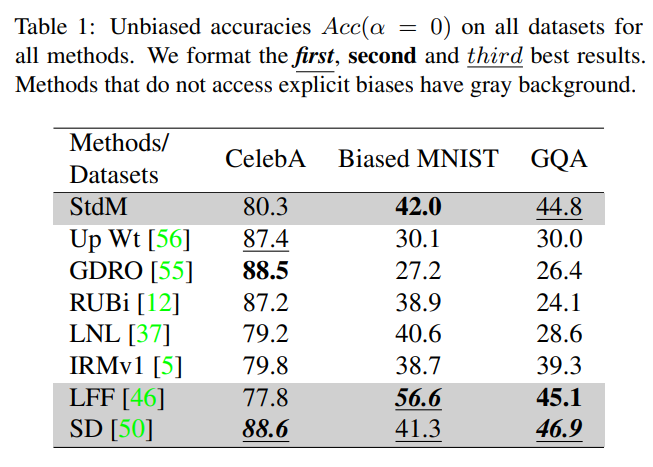

@inproceedings{shrestha2021investigation,

title={An investigation of critical issues in bias mitigation techniques},

author={Shrestha, Robik and Kafle, Kushal and Kanan, Christopher},

booktitle={IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

year={2021}

}

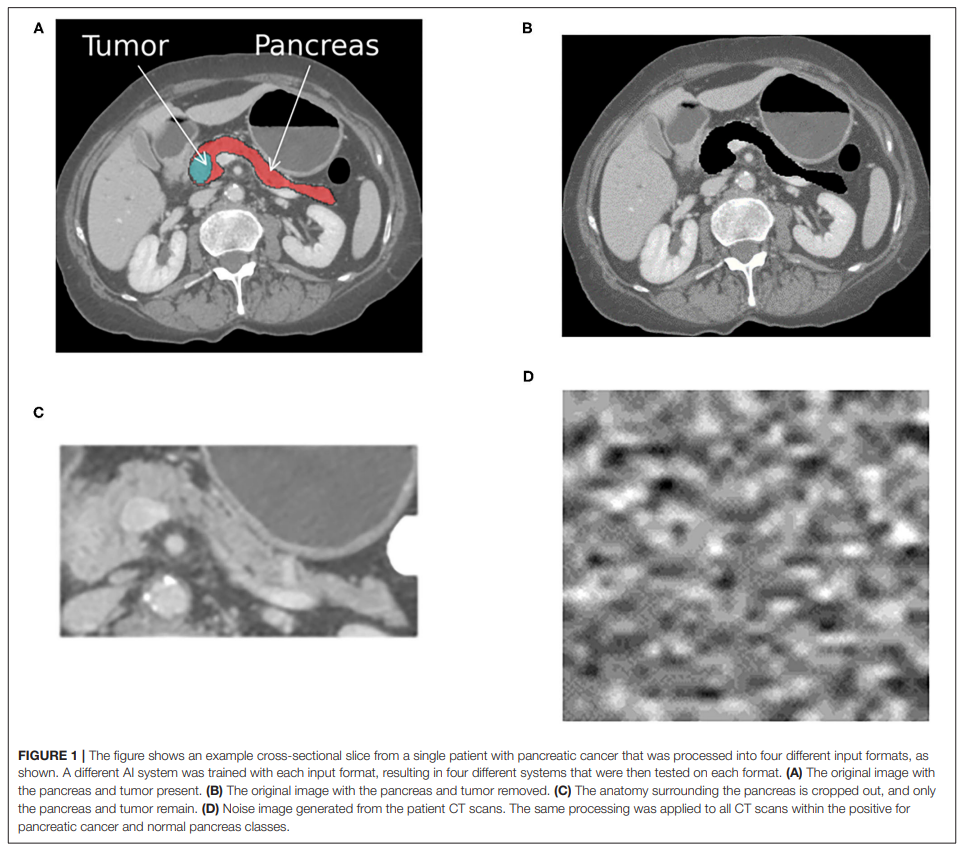

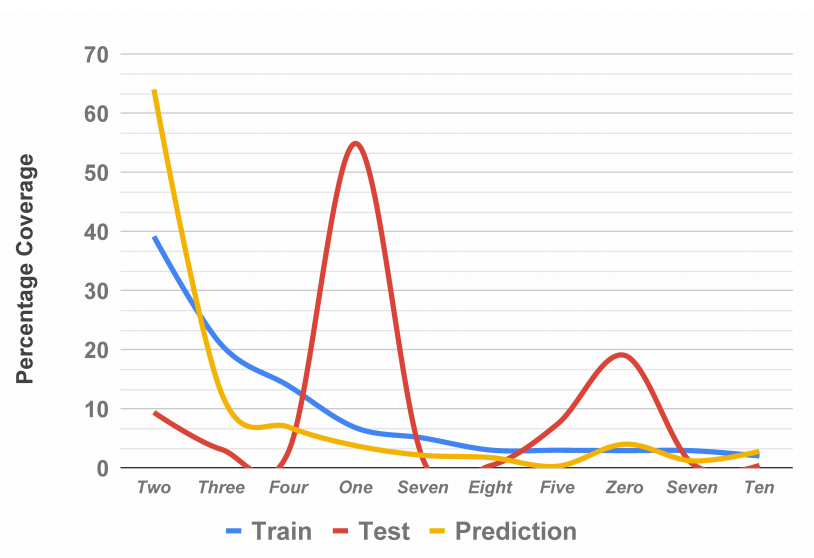

@ARTICLE{10.3389/fdgth.2021.671015,

AUTHOR={Mahmood, Usman and Shrestha, Robik and Bates, David D. B. and Mannelli, Lorenzo and Corrias, Giuseppe and Erdi, Yusuf Emre and Kanan, Christopher},

TITLE={Detecting Spurious Correlations With Sanity Tests for Artificial Intelligence Guided Radiology Systems},

JOURNAL={Frontiers in Digital Health},

VOLUME={3},

YEAR={2021},

URL={https://www.frontiersin.org/article/10.3389/fdgth.2021.671015},

DOI={10.3389/fdgth.2021.671015},

ISSN={2673-253X},

ABSTRACT={Artificial intelligence (AI) has been successful at solving numerous problems in machine perception. In radiology, AI systems are rapidly evolving and show progress in guiding treatment decisions, diagnosing, localizing disease on medical images, and improving radiologists' efficiency. A critical component to deploying AI in radiology is to gain confidence in a developed system's efficacy and safety. The current gold standard approach is to conduct an analytical validation of performance on a generalization dataset from one or more institutions, followed by a clinical validation study of the system's efficacy during deployment. Clinical validation studies are time-consuming, and best practices dictate limited re-use of analytical validation data, so it is ideal to know ahead of time if a system is likely to fail analytical or clinical validation. In this paper, we describe a series of sanity tests to identify when a system performs well on development data for the wrong reasons. We illustrate the sanity tests' value by designing a deep learning system to classify pancreatic cancer seen in computed tomography scans.}

}

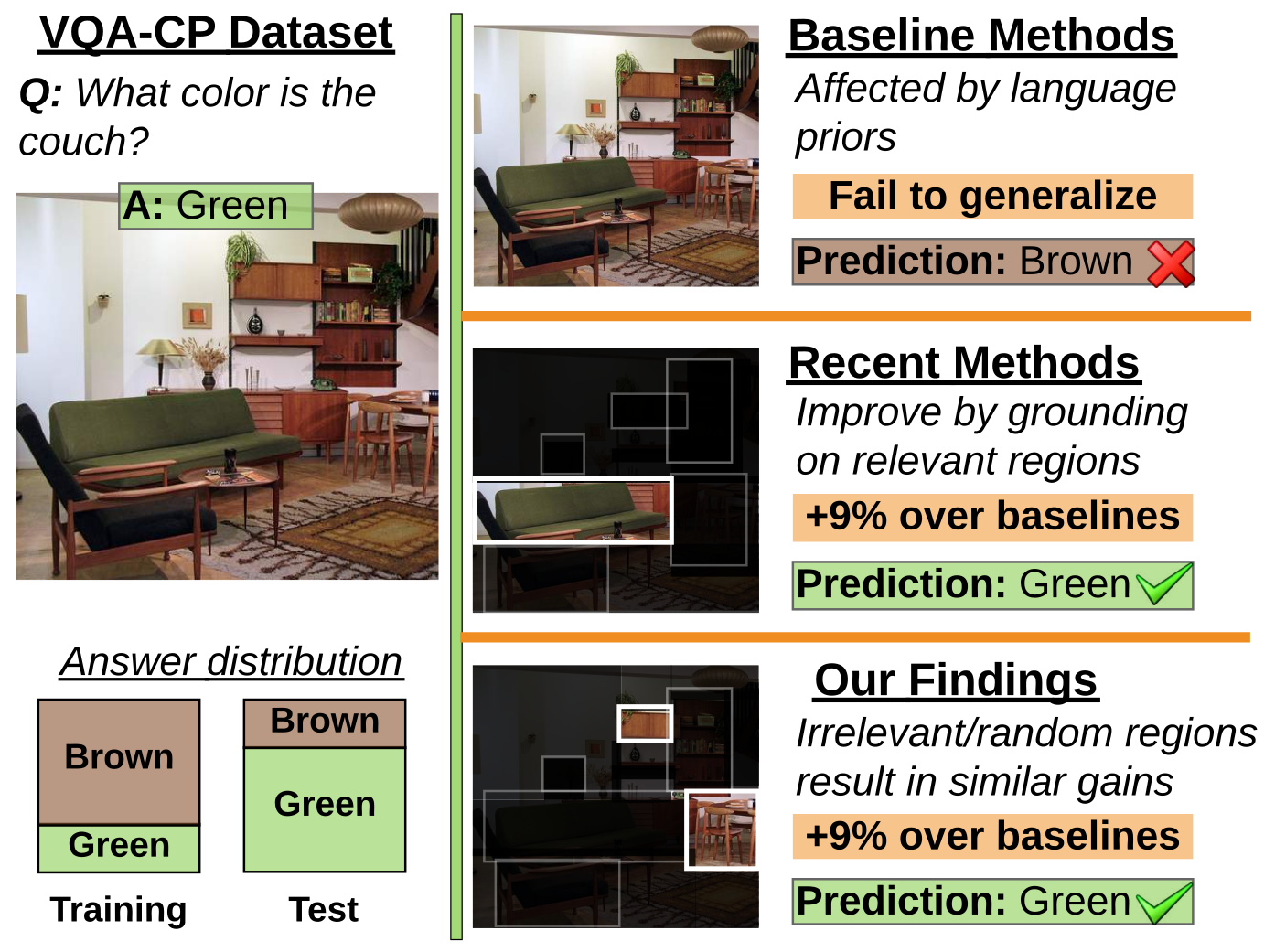

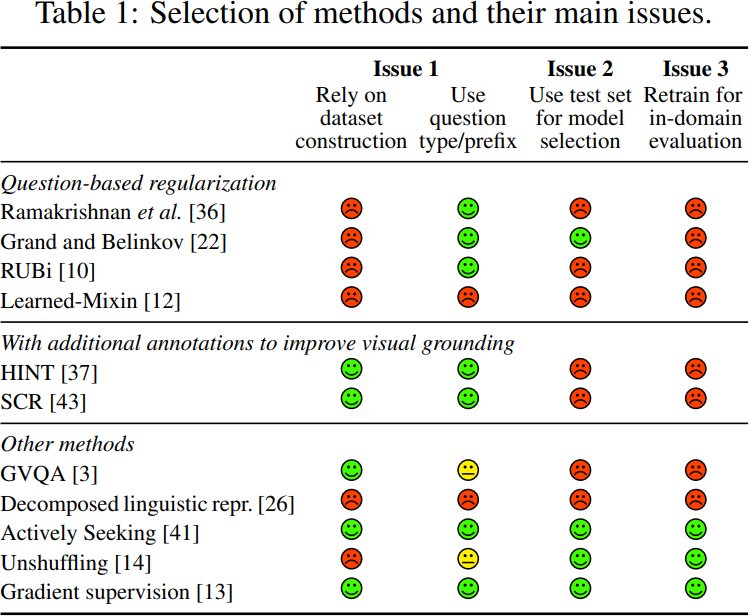

@inproceedings{shrestha-etal-2020-negative,

title = "A negative case analysis of visual grounding methods for {VQA}",

author = "Shrestha, Robik and

Kafle, Kushal and

Kanan, Christopher",

booktitle = "Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics",

month = jul,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.acl-main.727",

pages = "8172--8181"

}

@inproceedings{teney2020value,

title={On the Value of Out-of-Distribution Testing: An Example of Goodhart's Law},

author={Teney, Damien and Kafle, Kushal and Shrestha, Robik and Abbasnejad, Ehsan and Kanan, Christopher and van den Hengel, Anton},

booktitle={Advances in neural information processing systems (NeurIPS)},

year={2020}

}

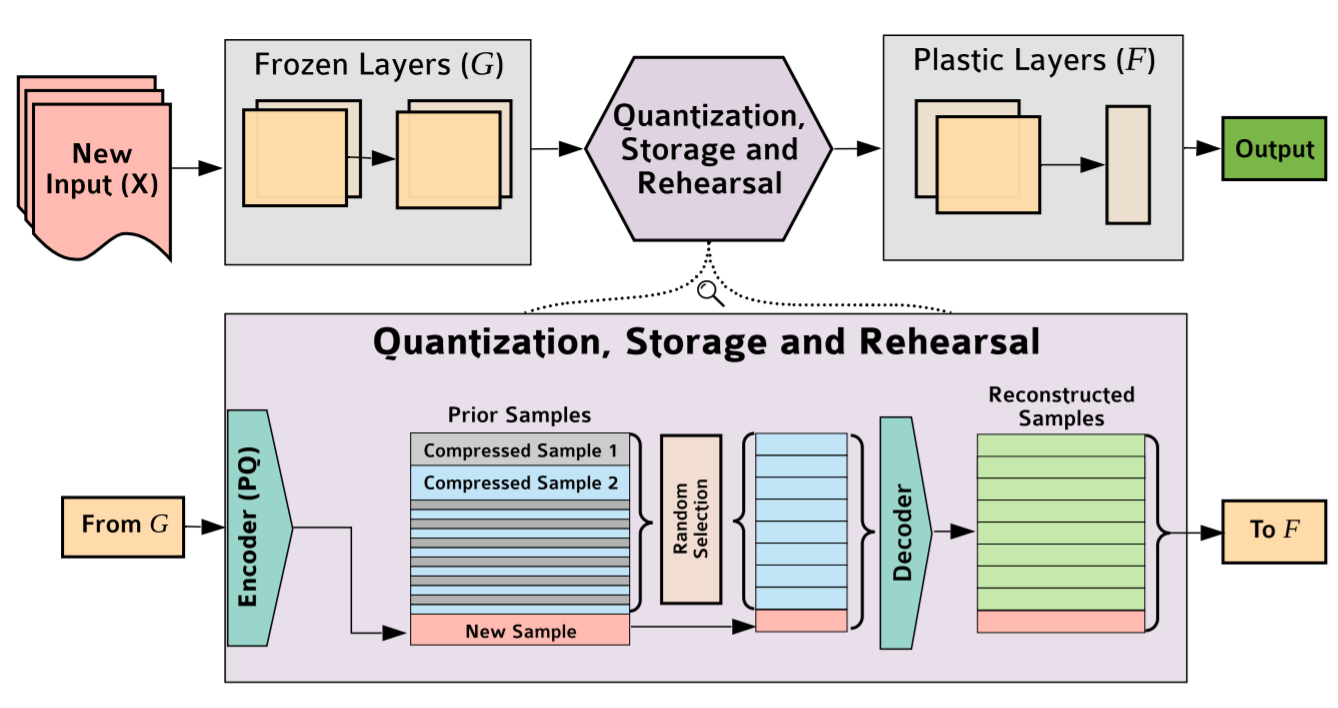

@article{hayes2019remind,

title={REMIND Your Neural Network to Prevent Catastrophic Forgetting},

author={Hayes, Tyler L and Kafle, Kushal and Shrestha, Robik and Acharya, Manoj and Kanan, Christopher},

journal={arXiv preprint arXiv:1910.02509},

year={2019}

}

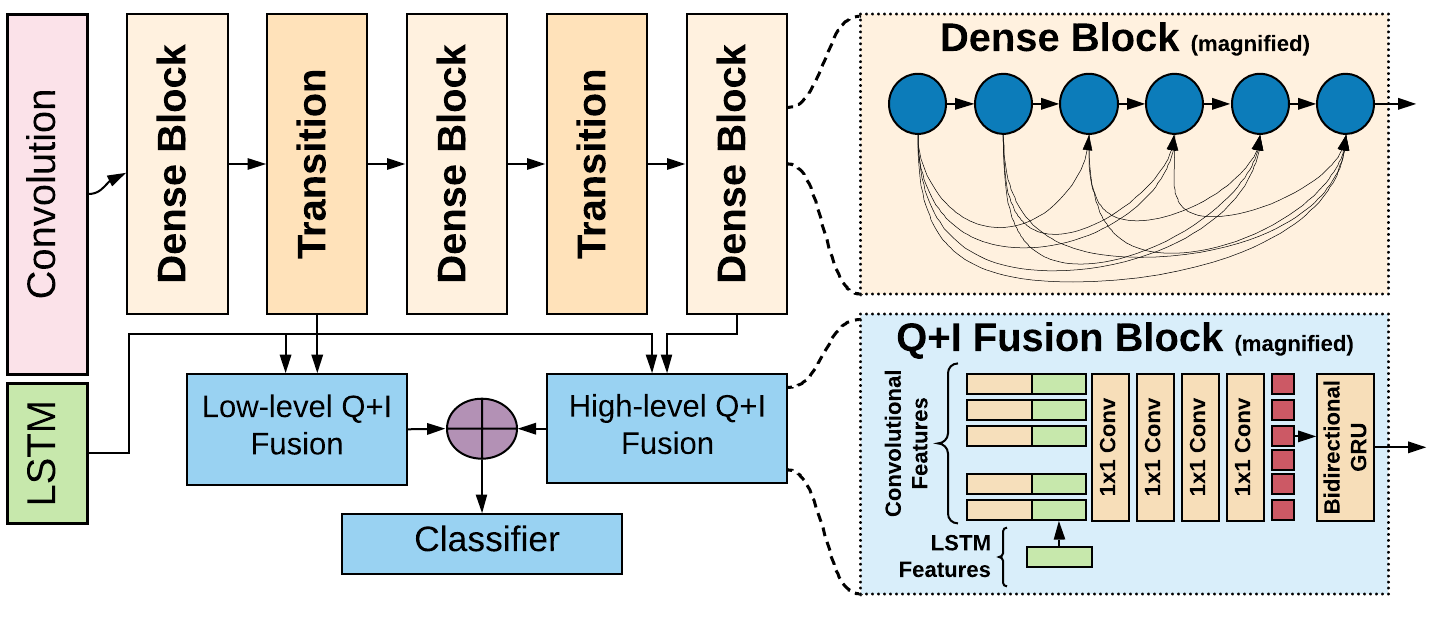

@inproceedings{kafle2020answering,

title={Answering Questions about Data Visualizations using Efficient Bimodal Fusion},

author={Kafle, Kushal and Shrestha, Robik and Cohen, Scott and Price, Brian and Kanan, Christopher},

booktitle={The IEEE Winter Conference on Applications of Computer Vision},

pages={1498--1507},

year={2020}

}

@article{kafle2019challenges,

title={Challenges and Prospects in Vision and Language Research},

author={Kafle, Kushal and Shrestha, Robik and Kanan, Christopher},

journal={arXiv preprint arXiv:1904.09317},

year={2019}

}

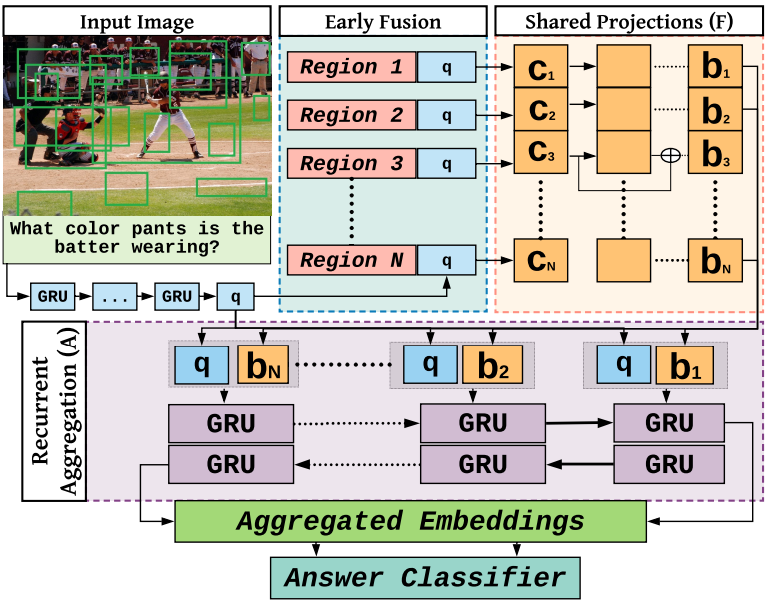

@inproceedings{shrestha2019ramen,

title={Answer Them All! Toward Universal Visual Question Answering Models},

author={Shrestha, Robik and Kafle, Kushal and Kanan, Christopher},

booktitle={CVPR},

year={2019}

}